Software Engineering

Extensive software is engineered by the company's best software engineers for internal and external projects to improve business efficiency and productivity, which enables greater profitability.

Software engineering is the systematic application of engineering approaches to the development of software. The company has over 20,000 exceptional software engineers and over 25,000 science engineers. They are responsible for designing, developing, maintaining, testing, and evaluating computer software, but their skill sets extend much further. The company's software engineers are the core of the innovation delivered through the operations of its subsidiaries and the development of new products and applications. Artificial intelligence, including deep learning and machine learning, is used throughout the company on all projects.

The company provides one hour each day for employees to learn new skill sets and build security awareness. The majority of our software engineers work with the business units to develop new business applications. Multiple programming languages and platforms are used, such as .NET, C, C#, C++, Python, Java, JavaScript, Kotlin, Go, Scala, Rust, PHP, SQL, Dart, Fortran and others. AI-ML is a major area of focus.

The company’s IT infrastructure is administered by very few IT professionals. They work as a team to maintain and administer the network, databases, storage, systems, platforms, security, virtualization, cloud and other IT functions. New personnel join the team for apprenticeship training, and experienced personnel are given other challenging opportunities, such as in DevOps and AI-ML. The continuous training and the experience gained by working in other areas have enabled the IT staff to acquire multiple skill sets.

The Customer Contact Center (CCC) software platform project is an example of a complex application of artificial intelligence, machine learning, large structured data sets and big data. The company is building a CCC platform that uses an AI avatar, which relies on voice recognition to understand callers and searches large technical and business knowledge databases to answer all technical and business inquiries. The AI avatar appears to be a live person who interacts with the client using the image and voice of another person, who may no longer even exist. The platform is intended to replace more than 800 CCC staff who currently work for the company. This complex software engineering project is now in the beta testing phase.

Containers

Docker containers, along with Kubernetes, are used primarily for DevOps. Kubernetes works well for scaling and managing applications in containers, and its orchestration is used in many mission-critical Docker applications. The programming teams are able to learn how to use Docker containers and Kubernetes within a week and begin building applications rapidly. It is important to understand the difference between virtual machines and containers: a virtual machine runs a full guest operating system on virtualized hardware, whereas a container shares the host operating system's kernel and packages only the application and its dependencies.

Moreover, our data scientists especially like using Kubernetes clustering, which is able to accommodate large amounts of data.

Docker is an open-source containerization platform. It enables developers to package applications into containers: standardized executable components that combine application source code with the operating system (OS) libraries and dependencies required to run that code in any environment. Kubernetes is an open-source container orchestration platform that enables the operation of an elastic web server framework for cloud applications. Kubernetes can support data center outsourcing to public cloud service providers or can be used for web hosting at scale. A fundamental difference between Kubernetes and Docker is that Kubernetes is meant to run across a cluster, while Docker runs on a single node. Kubernetes is more extensive than Docker Swarm and is meant to coordinate clusters of nodes efficiently at scale in production. Jenkins is often used with Docker, but there are significant differences.
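
As a rough illustration of how Kubernetes scales an application, the Horizontal Pod Autoscaler documentation describes the target replica count as ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while the ratio stays within a tolerance (0.1 by default). The sketch below reimplements only that arithmetic; the `desired_replicas` helper, its min/max defaults, and the CPU figures are hypothetical, and the real controller also applies stabilization windows and other safeguards.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Hypothetical helper mirroring the HPA scaling formula (simplified)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band: keep the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to configured bounds, as an HPA's minReplicas/maxReplicas would.
    return max(min_replicas, min(max_replicas, desired))

# 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
```

Scaling down uses the same formula: 4 pods at 30% CPU against a 60% target gives 2 pods.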

Docker is a container engine that can create and manage containers, whereas Jenkins is a continuous integration (CI) engine that can run builds and tests on your app. Docker is used to build and run multiple portable environments of your software stack, while Jenkins automates the building and testing of your app. Jenkins is also different from Kubernetes. Kubernetes is a system for automating deployment, scaling, and management; in short, it handles the entire orchestration of containerized applications.
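
To make the distinction concrete, the core of what a CI engine such as Jenkins does can be sketched as running build and test stages in order and stopping at the first failure. This is not Jenkins's API or pipeline syntax; the `run_pipeline` function and the stage commands are hypothetical stand-ins, written in Python so the sketch runs anywhere.

```python
import subprocess
import sys

def run_pipeline(stages):
    """Run (name, command) stages in order; return the first failing stage name, or None."""
    for name, command in stages:
        result = subprocess.run(command, capture_output=True, text=True)
        print(f"[{name}] exit code {result.returncode}")
        if result.returncode != 0:
            # A CI engine would mark the build as failed and skip later stages.
            return name
    return None

# Hypothetical stages: each runs a tiny Python snippet in a child process.
stages = [
    ("build", [sys.executable, "-c", "print('compiling')"]),
    ("test", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    ("package", [sys.executable, "-c", "print('packaging')"]),
]
failed = run_pipeline(stages)
print("pipeline", "failed at " + failed if failed else "succeeded")
```

Docker would typically supply the environment each stage runs in, and Kubernetes would run the resulting containers; the CI engine only sequences and reports on the stages.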

There are many benefits to using containers. First, DevOps teams benefit greatly from the more efficient approach to software development. Containers allow engineering teams to be more agile by reducing wasted resources and empowering teams to build and share code more rapidly in the form of microservices. On top of this, containerization improves scalability through a more lightweight and resource-efficient approach. This improved scalability, coupled with the improvements in development efficiency and velocity, results in greater time and cost efficiencies. Containers are highly flexible and scalable. With containers, we can run more processes on each virtual machine and increase its efficiency. We can also run almost any sort of workload within a container because it is isolated, which protects each workload from the others. As a result, one container is unlikely to destroy or impact another container.

Ease of use is another core benefit of containers. Just as virtual machines were easier to create, scale, and manage than physical hardware, containers make it even easier to build software than virtual machines do, because they can start up in a few seconds. Gone are the days of running the heavy load of processes required by a full virtual machine. Instead, a container affords us the luxury of running a lightweight, isolated process on top of our existing virtual machine. This allows us to scale quickly and easily without getting bogged down with DevOps busywork.

Blockchain

Although almost all data is encrypted, the company also uses blockchain to secure data records. A blockchain is a distributed database that is shared among the nodes of a computer network. As a database, a blockchain stores information electronically in digital format. Blockchains are best known for their crucial role in cryptocurrency systems, such as Bitcoin, where they maintain a secure and decentralized record of transactions. The innovation of a blockchain is that it guarantees the fidelity and security of a record of data and generates trust without the need for a trusted third party.

One key difference between a typical database and a blockchain is how the data is structured. A blockchain collects information together in groups, known as blocks, that hold sets of information. Blocks have certain storage capacities and, when filled, are closed and linked to the previously filled block, forming a chain of data known as the blockchain. All new information that follows that freshly added block is compiled into a newly formed block that will then also be added to the chain once filled.
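
The block-filling behaviour described above can be sketched in a few lines of Python. This is a toy model, not any production blockchain's format: the `Block` and `Chain` classes, the capacity of three records per block, and the SHA-256 hashing of a JSON encoding are all illustrative assumptions.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

BLOCK_CAPACITY = 3  # hypothetical: records per block before it is closed

@dataclass
class Block:
    index: int
    prev_hash: str
    records: list = field(default_factory=list)
    timestamp: float = 0.0
    hash: str = ""

    def seal(self) -> None:
        """Close the block: stamp the time and hash its contents plus the previous hash."""
        self.timestamp = time.time()
        payload = json.dumps(
            {"index": self.index, "prev": self.prev_hash,
             "records": self.records, "ts": self.timestamp},
            sort_keys=True,
        )
        self.hash = hashlib.sha256(payload.encode()).hexdigest()

class Chain:
    def __init__(self):
        genesis = Block(index=0, prev_hash="0" * 64)
        genesis.seal()
        self.blocks = [genesis]
        self.open_block = Block(index=1, prev_hash=genesis.hash)

    def add_record(self, record: dict) -> None:
        self.open_block.records.append(record)
        if len(self.open_block.records) == BLOCK_CAPACITY:
            # The filled block is closed and linked; a fresh block is opened after it.
            self.open_block.seal()
            self.blocks.append(self.open_block)
            self.open_block = Block(index=len(self.blocks), prev_hash=self.open_block.hash)

chain = Chain()
for i in range(7):
    chain.add_record({"tx": i})
print(len(chain.blocks), len(chain.open_block.records))  # 3 1
```

Seven records yield two filled blocks after the genesis block, with the seventh record waiting in the open block until it fills.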

A database usually structures its data into tables, whereas a blockchain, as its name implies, structures its data into chunks (blocks) that are strung together. When implemented in a decentralized manner, this data structure inherently creates an irreversible timeline of data. When a block is filled, it is set in stone and becomes part of this timeline. Each block in the chain is given an exact time stamp when it is added to the chain. Some of the best features of blockchain include:

- Blockchain is a type of shared database that differs from a typical database in the way that it stores information; blockchains store data in blocks that are then linked together via cryptography.

- As new data comes in, it is entered into a fresh block. Once the block is filled with data, it is chained onto the previous block, which makes the data chained together in chronological order.

- Different types of information can be stored on a blockchain, but the most common use so far has been as a ledger for transactions.

- Blockchain is used in a decentralized way so that no single person or group has control—rather, all users collectively retain control.

- Decentralized blockchains are immutable, which means that the data entered is irreversible. This means that transactions are permanently recorded and viewable by anyone.

The goal of blockchain is to allow digital information to be recorded and distributed, but not edited. In this way, a blockchain is the foundation for immutable ledgers, or records of transactions that cannot be altered, deleted, or destroyed. This is why blockchain is also known as distributed ledger technology (DLT).

Imagine that a company owns a server farm with 10,000 computers used to maintain a database holding all of its clients’ account information. This company owns a warehouse building that contains all of these computers under one roof and has full control of each of these computers and all of the information contained within them. This, however, creates a single point of failure: the electricity at that location could go out, its Internet connection could be severed, it could burn to the ground, or a bad actor could erase everything with a single keystroke. In any of these cases, the data is either lost or corrupted.

Blockchain allows the data held in that database to be spread out among several network nodes at various locations. This not only creates redundancy but also maintains the fidelity of the data stored therein. If somebody tries to alter a record at one instance of the database, the other nodes would not be altered and thus would prevent a bad actor from doing so. If one user tampers with a record of transactions, all other nodes would cross-reference each other and easily pinpoint the node with the incorrect information. This system helps to establish an exact and transparent order of events. This way, no single node within the network can alter information held within it.

Because of this, the information and history of transactions are irreversible. Such a record could be a list of transactions, but it is also possible for a blockchain to hold a variety of other information, such as legal contracts, state identifications, or a company’s product inventory.

To validate new entries or records to a block, a majority of the decentralized network’s computing power would need to agree to it. To prevent bad actors from validating bad transactions or double spends, blockchains are secured by a consensus mechanism such as proof of work (PoW) or proof of stake (PoS). These mechanisms allow for agreement even when no single node is in charge.

The records stored in the blockchain are protected by public-private key cryptography: each user is identified by an address derived from a public key, and only the holder of the matching private key can authorize transactions from that address. As a result, users of blockchains can remain pseudonymous while preserving transparency.

Blockchain technology achieves decentralized security and trust in several ways. To begin with, new blocks are always stored linearly and chronologically. That is, they are always added to the “end” of the blockchain. After a block has been added to the end of the blockchain, it is extremely difficult to go back and alter the contents of the block unless a majority of the network has reached a consensus to do so. That’s because each block contains its own hash, along with the hash of the block before it, as well as the previously mentioned time stamp. Hash codes are created by a mathematical function that turns digital information into a string of numbers and letters. If that information is edited in any way, then the hash code changes as well.
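
The hash linking described above can be checked mechanically. The minimal sketch below assumes a simplified block layout of our own (a dict with `data`, `timestamp`, and `prev_hash`, using list positions as stand-in timestamps) rather than any real blockchain's format. It builds a short chain and shows that editing one block's contents breaks the chain at that block.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    # Hash everything except the stored hash itself (simplified, hypothetical layout).
    body = {k: block[k] for k in ("data", "timestamp", "prev_hash")}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def make_chain(entries):
    chain, prev = [], "0" * 64
    for ts, data in enumerate(entries):
        block = {"data": data, "timestamp": ts, "prev_hash": prev}
        block["hash"] = block_hash(block)
        chain.append(block)
        prev = block["hash"]
    return chain

def first_invalid_block(chain):
    """Return the index of the first block whose hash or back-link no longer matches, else None."""
    for i, block in enumerate(chain):
        if block["hash"] != block_hash(block):
            return i
        if i > 0 and block["prev_hash"] != chain[i - 1]["hash"]:
            return i
    return None

chain = make_chain(["alice->bob:5", "bob->carol:2", "carol->dave:1"])
print(first_invalid_block(chain))  # None

chain[1]["data"] = "bob->carol:200"  # tamper with a recorded transaction
print(first_invalid_block(chain))  # 1
```

If the attacker also recomputes block 1's hash, the check fails at block 2 instead, because block 2 still stores the old hash. Every later block would have to be rebuilt as well.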

For example, suppose a hacker who runs a node on a blockchain network wants to alter the blockchain and steal information from everyone else. If they were to alter their own single copy, it would no longer align with everyone else’s copy. When everyone else cross-references their copies against each other, they would see this one copy stand out, and the hacker’s version of the chain would be cast away as illegitimate.

Succeeding with such a hack would require that the hacker simultaneously control and alter 51% or more of the copies of the blockchain (in proof-of-work networks, 51% or more of the computing power) so that their new copy becomes the majority copy and, thus, the agreed-upon chain. Such an attack would also require an immense amount of money and resources, because the attacker would need to redo all of the subsequent blocks, which would now have different time stamps and hash codes.
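
The cross-referencing described above can be sketched as comparing each node's latest block hash and accepting the version held by a strict majority. This is a deliberate simplification: real networks resolve disagreements through their consensus rules (for example, preferring the chain with the most accumulated work), not a head count of copies. The `majority_chain` helper, node names, and hashes are made up.

```python
from collections import Counter

def majority_chain(tip_hashes: dict):
    """Given node -> latest block hash, return (accepted hash, outlier nodes).

    The accepted hash must be held by more than half of the nodes;
    otherwise no chain is agreed upon and (None, []) is returned.
    """
    counts = Counter(tip_hashes.values())
    tip, votes = counts.most_common(1)[0]
    if votes * 2 <= len(tip_hashes):
        return None, []
    outliers = sorted(node for node, h in tip_hashes.items() if h != tip)
    return tip, outliers

# Hypothetical network of five nodes; node-d holds a tampered copy.
tips = {"node-a": "9f2c", "node-b": "9f2c", "node-c": "9f2c",
        "node-d": "e71b", "node-e": "9f2c"}
print(majority_chain(tips))  # ('9f2c', ['node-d'])
```

If the attacker controlled three of the five copies, the tampered hash would become the accepted one, which is the 51% scenario described in the text.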

Due to the size of many networks and how fast they are growing, the cost to pull off such a feat probably would be insurmountable. This would be not only extremely expensive but also likely fruitless. Doing such a thing would not go unnoticed, as network members would see such drastic alterations to the blockchain. The network members would then hard fork off to a new version of the chain that has not been affected.

Currently, tens of thousands of projects are looking to implement blockchains in a variety of ways to help society beyond recording transactions, for example as a way to vote securely in democratic elections. The immutability of blockchain means that fraudulent voting would become far more difficult. For example, a voting system could work such that each citizen of a country would be issued a single cryptocurrency or token. Each candidate would then be given a specific wallet address, and voters would send their token or crypto to the address of the candidate for whom they wish to vote. The transparent and traceable nature of blockchain could eliminate both the need for human vote counting and the ability of bad actors to tamper with physical ballots.
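
The token-based voting idea can be modelled as a simple ledger: each citizen is issued one token, a vote moves that token to a candidate's wallet address, and a voter without a token cannot vote again. The `TokenBallot` class below is a hypothetical in-memory model of that rule only; it omits signatures, token distribution, and the blockchain itself, and the voter IDs and wallet addresses are invented.

```python
from collections import Counter

class TokenBallot:
    """Hypothetical in-memory model of one-token-per-citizen voting."""

    def __init__(self, voters, candidate_wallets):
        self.balances = {voter: 1 for voter in voters}  # one token per citizen
        self.wallets = set(candidate_wallets)
        self.ledger = []  # append-only list of (voter, wallet) transfers

    def vote(self, voter: str, wallet: str) -> bool:
        """Transfer the voter's single token to a candidate wallet; reject double votes."""
        if wallet not in self.wallets or self.balances.get(voter, 0) < 1:
            return False
        self.balances[voter] -= 1
        self.ledger.append((voter, wallet))
        return True

    def tally(self) -> Counter:
        # Counting is just reading the ledger; no manual vote counting is needed.
        return Counter(wallet for _, wallet in self.ledger)

ballot = TokenBallot(["v1", "v2", "v3"], ["wallet-A", "wallet-B"])
ballot.vote("v1", "wallet-A")
ballot.vote("v2", "wallet-B")
ballot.vote("v3", "wallet-A")
print(ballot.vote("v1", "wallet-B"))  # False
print(ballot.tally())                 # Counter({'wallet-A': 2, 'wallet-B': 1})
```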

Integration and Automation

An important IT strategy is to integrate and automate everything and to embed AI-ML into the processes. Integration is the process of combining two or more things to create a whole. For business units, software system integration means bringing together multiple business systems to operate as a collaborative unit. IT integration provides a progressive form of organizing social production, based on the technological and organizational unification of different production processes in a single enterprise. Integration allows information to be shared between the connected systems. These integrations can take many forms, such as requesting information from a website, internal employee systems sending and receiving information, or feeding customer data from a point-of-sale system into a CRM to automate recommendations.

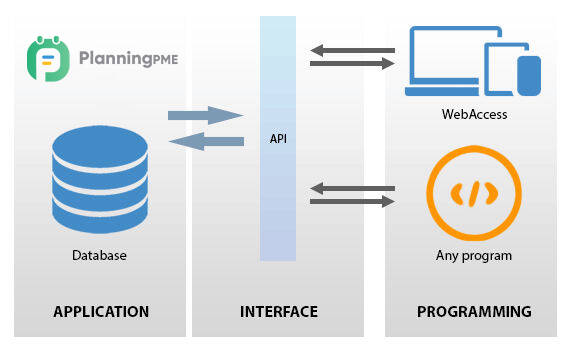
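
The point-of-sale-to-CRM example above can be sketched as two small steps: map each sale from the POS system's record format into the CRM's customer record, then derive a recommendation from the merged purchase history. Both record layouts, the `pos_to_crm` and `recommend` helpers, and the "bought together" rule are hypothetical; real POS and CRM products each define their own schemas and APIs.

```python
from collections import Counter

def pos_to_crm(sale: dict, crm: dict) -> None:
    """Merge one POS sale (hypothetical POS schema) into the CRM customer record."""
    customer = crm.setdefault(sale["cust_id"], {"purchases": []})
    customer["purchases"].append(sale["sku"])

def recommend(crm: dict, cust_id: str):
    """Suggest the item most often bought by customers who share a purchase with this one."""
    mine = set(crm[cust_id]["purchases"])
    scores = Counter()
    for other_id, record in crm.items():
        theirs = set(record["purchases"])
        if other_id != cust_id and mine & theirs:
            scores.update(theirs - mine)
    return scores.most_common(1)[0][0] if scores else None

crm = {}
for sale in [
    {"cust_id": "c1", "sku": "printer"}, {"cust_id": "c1", "sku": "toner"},
    {"cust_id": "c2", "sku": "printer"}, {"cust_id": "c2", "sku": "toner"},
    {"cust_id": "c2", "sku": "paper"}, {"cust_id": "c3", "sku": "printer"},
]:
    pos_to_crm(sale, crm)
print(recommend(crm, "c3"))  # toner
```

Customer c3 bought a printer; both other printer buyers also bought toner, so toner scores highest.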

Application Programming Interfaces (APIs)

API integration is the connection between two or more applications via their APIs (application programming interfaces), which allows the systems to exchange data. API integrations power processes throughout many sectors and layers of an organization to keep data in sync, enhance productivity and drive revenue.

An API is an interface, and APIs are part and parcel of almost everything in the digital world. Regardless of the business and the size of the enterprise, APIs enable the seamless operation and performance of applications and web systems.

APIs are needed to achieve optimal, automated business processes and procedures that interact and share critical data. API integrations are critical for digital transformation.

API connectivity helps applications share data and communicate with each other without human intervention. You enable communication between two web tools or applications through their APIs. This allows organizations to automate systems, share data seamlessly, and integrate current applications.

Enterprises cannot overlook the importance of API integration in the modern world. After the explosion of cloud-based products and apps, organizations must create a connected system in which data is relayed automatically between various software tools. API integration makes that possible because it allows process and enterprise data to be shared among the applications in a given ecosystem.

API integration unlocks a new level of flexibility in information and service delivery. It also makes it easy to embed content from different sites and apps. An API acts as the interface that permits the integration of two applications.

E-commerce sites are among the most significant users of API integrations. Web stores have order management systems that process shopping and shipping orders. To process them, these systems need to access servers and databases that hold customer, product, and inventory-level data. There is an ongoing data interchange that connects the online store to the shopping cart, and enterprises achieve this through API integration.
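
The store-to-inventory exchange can be sketched with the Python standard library: a small HTTP service exposes stock levels as JSON, and the web store queries it instead of a person looking the numbers up. The `/inventory/<sku>` endpoint, the SKUs, the quantities, and the `get_stock` helper are hypothetical, and the server runs locally in a background thread only so the example is self-contained.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.error import HTTPError
from urllib.request import ProxyHandler, build_opener

# Hypothetical stock levels held by the inventory system.
INVENTORY = {"SKU-1": 12, "SKU-2": 0}

class InventoryHandler(BaseHTTPRequestHandler):
    """Inventory service: GET /inventory/<sku> returns {"sku": ..., "on_hand": ...}."""

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        if self.path.startswith("/inventory/") and sku in INVENTORY:
            status, payload = 200, {"sku": sku, "on_hand": INVENTORY[sku]}
        else:
            status, payload = 404, {"error": "unknown sku"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the demo

def start_server() -> HTTPServer:
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server

# Ignore system proxy settings; the demo only talks to localhost.
opener = build_opener(ProxyHandler({}))

def get_stock(base_url: str, sku: str):
    """Web-store side: ask the inventory API for units on hand (None for unknown SKUs)."""
    try:
        with opener.open(f"{base_url}/inventory/{sku}", timeout=5) as resp:
            return json.loads(resp.read())["on_hand"]
    except HTTPError as err:
        if err.code == 404:
            return None
        raise

server = start_server()
base_url = f"http://127.0.0.1:{server.server_address[1]}"
stock_sku1 = get_stock(base_url, "SKU-1")
stock_unknown = get_stock(base_url, "SKU-404")
server.shutdown()
server.server_close()
print(stock_sku1, stock_unknown)  # 12 None
```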

The payment gateway integration is another example. As a consumer, you don’t see the actual transaction when paying for a product online. But in the background, there is data transfer for verification of your credit card/debit card details. The payment gateway API is integrated into the web store and often hidden out of sight.
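
The actual card verification happens at the payment processor, but a common quick check before any data is sent is the Luhn (mod 10) checksum that card numbers carry. The sketch below shows only that checksum, as a swapped-in illustration rather than the full verification flow described above; `luhn_valid` is a hypothetical helper name, and "4111 1111 1111 1111" is a widely published test number, not a real card.

```python
def luhn_valid(card_number: str) -> bool:
    """Return True if the digits pass the Luhn (mod 10) checksum."""
    digits = [int(ch) for ch in card_number if ch.isdigit()]
    if len(digits) < 2:
        return False
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9  # same as adding the two digits of the product
        total += digit
    return total % 10 == 0

print(luhn_valid("4111 1111 1111 1111"))  # True
print(luhn_valid("4111 1111 1111 1112"))  # False
```

Passing the checksum only means the number is well formed; whether the card is real, active, and funded is decided by the processor behind the gateway API.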

There are several ways to achieve API integration, and the right one depends largely on the individual needs of the system or business. Connector applications are designed to facilitate data transfer between two well-known software platforms. Connectors are affordable, make it quicker to deploy standard API solutions, and make integrations easier to manage and maintain. They also reduce the need for API management. These are typically SaaS applications dedicated to developing API integrations that help join other SaaS applications and systems.

Connectors also support a certain level of flexibility, as you can build custom integration apps using robust tools available in the market. Some can fetch data and transform it into a form your app can understand with just a single query, and some permit requests to different private and public APIs, as well as databases, simultaneously.

Custom-built integrations involve hand-written scripts from a software developer with a deep understanding of the API documentation. This technique was popular some years ago, but the cost of custom development (and ongoing maintenance) has made it less attractive than newer integration methods. It can also be very time-consuming.

The benefits of API integration include:

Automation - API integration allows the handoff of information and data from one application to the next automatically. Successful automation helps eliminate the manual (human) component, which saves time and dramatically reduces errors.

Scalability - API integration allows businesses to grow since they don’t need to start from scratch when creating connected systems and applications.

Streamlined Visibility/Communication/Reporting - API integration gives you end-to-end visibility of all systems and processes for improved communication and reporting. With a streamlined approach, you can track and monitor data effectively and create robust reports based on specific and comprehensive datasets.

Reduces Errors - API integration allows the transfer of complex and voluminous data with fewer errors and omissions.

Robotic Process Automation

Robotic process automation (RPA) is a software technology that makes it easy to build, deploy, and manage software robots that emulate human actions when interacting with digital systems and software.

RPA is used to automate various supply chain processes, including data entry, predictive maintenance and after-sales service support. RPA is used across industries to automate high-volume, rote tasks. Telecommunications companies use RPA to configure new services and the associated billing systems for new accounts.

Robotic process automation (RPA) is a software-based technology that uses software robots to emulate human execution of a business process. This means that it performs the task on a computer using the same interface a human worker would: it clicks, types, opens applications and uses keyboard shortcuts.

Smart Process Automation

Smart Process Automation is a logical evolution of RPA technology in which robots use AI and ML to perform cognitive tasks. Using technologies such as machine learning and natural language processing (NLP), an algorithm can extract the relevant data points and understand and process them in context. Avant-Garde-Technologies primarily focuses on smart process automation throughout its IT infrastructure.

Robotic Process Automation (RPA) is software that is used to automate a high volume of repetitive, rule-based tasks. RPA tools allow users to design and deploy software robots that can mimic human actions. These tools also use predefined activities and business rules to autonomously execute a combination of tasks, transactions, and processes across software systems. RPA can deliver the desired result without human interaction.
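
The decision logic of such a rule-based software robot can be sketched as an ordered table of business rules applied to each incoming work item. Commercial RPA tools build this with visual designers and drive real application screens; the Python below models only the rules. The invoice fields, the thresholds, the rule names, and the choice to route some cases to human review (a common pattern for exceptions) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

APPROVED_VENDORS = {"Acme Corp", "Globex"}  # hypothetical master data
AUTO_APPROVE_LIMIT = 5_000.00               # hypothetical business threshold

@dataclass
class Invoice:
    vendor: str
    amount: float
    po_number: Optional[str]

# Each rule: (name, predicate, outcome when the predicate is true), checked in order.
RULES = [
    ("missing PO", lambda inv: not inv.po_number, "reject"),
    ("unknown vendor", lambda inv: inv.vendor not in APPROVED_VENDORS, "human review"),
    ("over limit", lambda inv: inv.amount > AUTO_APPROVE_LIMIT, "human review"),
]

def process(invoice: Invoice) -> tuple:
    """Apply the rules in order; the first match decides, otherwise auto-approve."""
    for name, applies, outcome in RULES:
        if applies(invoice):
            return outcome, name
    return "approve", "all rules passed"

batch = [
    Invoice("Acme Corp", 1200.0, "PO-1001"),
    Invoice("Initech", 300.0, "PO-1002"),
    Invoice("Globex", 9800.0, "PO-1003"),
    Invoice("Acme Corp", 50.0, None),
]
for inv in batch:
    print(inv.vendor, process(inv))
```

Keeping the rules in a table rather than in nested conditionals mirrors how RPA tools let business users update a flow without rewriting the robot.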

Traditional Automation is the automation of any repeated task. It relies on application integration at the database or infrastructure level and requires minimal human intervention.

IT process automation (ITPA) is a series of processes which facilitate the orchestration and integration of tools, people and processes through automated workflows. ITPA software applications can be programmed to perform any repeatable pattern, task or business workflow that was once handled manually by humans.
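
The orchestration that ITPA performs can be sketched as running tasks in dependency order. The example uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+); the onboarding tasks, their dependencies, and the `run_workflow` helper are hypothetical, and each task is a placeholder where a real automated action would go.

```python
from graphlib import TopologicalSorter

# Hypothetical new-employee onboarding workflow: task -> tasks it depends on.
WORKFLOW = {
    "create_account": set(),
    "assign_laptop": set(),
    "grant_email": {"create_account"},
    "grant_vpn": {"create_account", "assign_laptop"},
    "notify_manager": {"grant_email", "grant_vpn"},
}

def run_workflow(workflow: dict, actions: dict) -> list:
    """Execute each task only after all of its dependencies have finished."""
    completed = []
    for task in TopologicalSorter(workflow).static_order():
        actions.get(task, lambda: None)()  # placeholder for the real automation call
        completed.append(task)
    return completed

order = run_workflow(WORKFLOW, actions={})
print(order)
```

`TopologicalSorter` also raises `graphlib.CycleError` if the workflow contains a circular dependency, which catches a common mistake in hand-written workflow definitions.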

Service Automation

Service automation is the condensation of many human-centric services into a streamlined, software-based online platform. It maintains the integrity of the service itself while automating background tasks to provide a seamless user experience.

To automate a process, the following eight workflow steps must be addressed:

- Identify the process owner.

- Keep the 'Why' in mind.

- Get the history.

- Diagram the workflow.

- Gather data about the unautomated process.

- Talk with everyone involved in the workflow.

- Test the automation.

- Go live.

Characteristics of Robotic Process Automation (RPA)

· It does not require any modification in the existing systems or infrastructure.

· It can automate the repetitive, rule-based tasks. It mimics human actions to complete the tasks.

· A user can start using RPA without knowing any programming. RPA allows automation with easy-to-use flowchart diagrams, so users do not need to remember language syntax and scripting. They only need to focus on the functionality being automated.

· RPA is easy and quick to implement. It requires less time because RPA software is process-driven.

· RPA allows users to assign work to hundreds or thousands of virtual machines that can perform the allotted tasks without the requirement of physical machines.

· RPA can be configured to meet the requirements of a particular user. It can be combined with several applications (e.g., calendar, e-mail, ERP, CRM, etc.) to synchronize information and create automated replies.

· RPA can be a little costly in the initial phase. But it saves a lot of time, money, and effort in the long run.

· RPA is a more efficient option since it can make improvements instantly.

· With RPA, users can easily update any business flow due to its simplicity.

Characteristics of Traditional Automation

· It requires certain customizations in the existing IT infrastructure.

· It does not include the ability to mimic human actions. It only executes the pre-defined programmatic instructions.

· Users are required to have programming skills to use Traditional Automation for automating functionalities. The programming language required depends on the type of automation tool, and users need to remember language syntax and scripting.

· Traditional Automation can take several months for implementation. Test designing and feasibility studies take a longer time.

· Traditional Automation uses different programming techniques to achieve parallel execution or scalability. Physical machines are required to perform parallel execution, and those physical machines must provide good processing speed.

· When it comes to customization, Traditional Automation is considered a more critical and complex technology than RPA. The integration of different systems with Traditional Automation is a challenge due to the limitations of APIs.

· Traditional Automation is cheaper in the initial phase. However, it costs a lot more in the long run.

· Traditional Automation requires more time, effort, and a considerable workforce.

· Traditional Automation may force users to change various scripts. Hence, maintaining and updating this technology can be tough.

Vulnerability Assessment

Vulnerability assessment tools are designed to automatically scan for new and existing threats that can target your application. Types of tools include web application scanners, which test for and simulate known attack patterns, and protocol scanners, which search for vulnerable protocols, ports and network services. All software undergoes extensive design before anything else is done. After the software is developed through DevOps, it undergoes vulnerability assessment and penetration testing before it is released.
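
The port-scanning part of a protocol scanner boils down to attempting a connection to each port and noting which ones accept. The sketch below uses only Python's `socket` module to check a list of TCP ports on one host; `open_tcp_ports` is an illustrative helper, real scanners do far more (service fingerprinting, UDP, vulnerability lookups), and the demo scans only a listener it opens on the local machine.

```python
import socket

def open_tcp_ports(host: str, ports, timeout: float = 0.5) -> list:
    """Return the ports on `host` that accept a TCP connection (illustrative helper)."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found

# Self-contained demo: open a listener on a free local port, then scan for it.
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
    listener.bind(("127.0.0.1", 0))
    listener.listen()
    demo_port = listener.getsockname()[1]
    demo_result = open_tcp_ports("127.0.0.1", [demo_port])
print(demo_result == [demo_port])  # True
```

An open port is not itself a vulnerability; the scanner's next step is to identify the service behind it and compare its version against known flaws.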

Vulnerability Assessment and Penetration Testing (VAPT) are two types of vulnerability testing. The tests have different strengths and are often combined to achieve a more complete vulnerability analysis. In short, Penetration Testing and Vulnerability Assessments perform two different tasks, usually with different results, within the same area of focus.

Vulnerability assessment tools discover which vulnerabilities are present, but they do not differentiate between flaws that can be exploited to cause damage and those that cannot. Vulnerability scanners alert companies to the preexisting flaws in their code and where they are located. Penetration testing attempts to exploit the vulnerabilities in a system to determine whether unauthorized access or other malicious activity is possible and identify which flaws pose a threat to the application. Penetration tests find exploitable flaws and measure the severity of each. A penetration test is meant to show how damaging a flaw could be in a real attack rather than find every flaw in a system. Together, penetration testing and vulnerability assessment tools provide a detailed picture of the flaws that exist in an application and the risks associated with those flaws.

Vulnerability Assessment and Penetration Testing (VAPT) provides enterprises with a more comprehensive application evaluation than any single test alone. Using the Vulnerability Assessment and Penetration Testing (VAPT) approach gives an organization a more detailed view of the threats facing its applications, enabling the business to better protect its systems and data from malicious attacks. Vulnerabilities can be found in applications from third-party vendors and internally made software, but most of these flaws are easily fixed once found.

A Vulnerability Assessment and Penetration Testing (VAPT) platform finds flaws that could damage or endanger applications in order to protect internal systems, sensitive customer data and company reputation. Having a system in place to test applications during development means that security is built into the code rather than retroactively achieved through patches and expensive fixes.

Both dynamic and static code analysis are performed to identify flaws in code and to determine whether any missing functionality could lead to security breaches. For example, the VAPT platform can determine whether sufficient encryption is employed and whether a piece of software contains any application backdoors through hard-coded user names or passwords. This allows penetration testers and developers to spend more time remediating problems and less time sifting through non-threats.
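
One of the static checks mentioned, finding hard-coded user names or passwords, can be approximated with Python's `ast` module: parse the source and flag string literals assigned to names that look like credentials. The variable-name pattern, the `find_hardcoded_credentials` helper, and the sample source are assumptions for illustration; production static analysis tools use much richer rules and data-flow tracking.

```python
import ast
import re

# Hypothetical pattern for variable names that usually hold credentials.
SUSPICIOUS_NAME = re.compile(
    r"(pass(word|wd)?|secret|api_?key|token|user(name)?)$", re.IGNORECASE
)

def find_hardcoded_credentials(source: str) -> list:
    """Return (line, name) pairs where a credential-like name is assigned a string literal."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Assign)
                and isinstance(node.value, ast.Constant)
                and isinstance(node.value.value, str)):
            for target in node.targets:
                if isinstance(target, ast.Name) and SUSPICIOUS_NAME.search(target.id):
                    findings.append((node.lineno, target.id))
    return findings

sample = '''
db_user = "admin"
db_password = "hunter2"
password = os.environ["DB_PASSWORD"]
timeout = "30"
'''
print(find_hardcoded_credentials(sample))  # [(2, 'db_user'), (3, 'db_password')]
```

The third assignment is not flagged because its value is read from the environment at run time rather than written into the code, which is the remediation such findings usually call for.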

Open Source vs Commercial Software

The company uses both open-source and commercial software. Open-source software is preferred over commercial software because it normally contains more recent features and costs much less. The licensing for commercial software is very vague and often difficult to measure. Given a choice between commercial software and open-source software with fewer features but a lower cost, the open-source software would be selected. Before software is purchased and deployed, an extensive ROI analysis is done, and the purchase decision is normally based on ROI or security factors.
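
The ROI comparison mentioned above usually reduces to ROI = (benefit - cost) / cost over a chosen period. The sketch below applies that formula to two options; the `roi` helper and every figure are made-up placeholders, and a real analysis would also weigh support, migration, and the security factors the text mentions.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost

# Hypothetical 3-year figures (licences + support + staff time), in dollars.
options = {
    "open source": {"cost": 120_000, "benefit": 300_000},
    "commercial": {"cost": 250_000, "benefit": 360_000},
}
for name, figures in options.items():
    print(f"{name}: ROI = {roi(figures['benefit'], figures['cost']):.0%}")

best = max(options, key=lambda n: roi(options[n]["benefit"], options[n]["cost"]))
print("highest ROI:", best)
# open source: ROI = 150%
# commercial: ROI = 44%
# highest ROI: open source
```

In this made-up case the commercial option delivers more total benefit, yet the open-source option returns more per dollar spent, which is the comparison an ROI-based purchase decision turns on.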

Enhancing software purchased with DevOps, Automation, AI-ML

Almost all purchased software is modified to integrate into the company’s IT infrastructure. Processes are automated as much as possible, and AI-ML is used to make decisions. This allows far fewer people to intervene in most business processes.

The company operates multiple Security Operations Centers (SOCs) in different geographic regions. They operate 24 hours a day, 7 days a week, with over 30 personnel. Combined with the security software and AI-ML in use, the SOC staff are so proficient that a security breach can be detected and remediated within one minute. The deployment of SOCs is an intrinsic part of the company's defense-in-depth policy, since monitoring ensures that IT breaches can be identified and stopped within minutes.